"The use of meat juice for medicinal purposes is a growing one, and is recommended for the aged, also delicate invalids, and for invalids, in all cases where complete nourishment is required in a concentrated form. The meat to be operated upon should merely be thoroughly warmed by being quickly broiled over a hot fire, but not more than to simply scorch the outside, and then cut in strips. The yield should be about six (6) ounces from one (1) pound of round steak. Only tepid water may be added, as hot water will coagulate the meat juice. Season to taste. The machine being tinned, no metallic or inky flavor will be imparted to the material used. The dryness of the pulp or refuse can be regulated by the thumb-screw at the outlet." (The Manufacturer 7, no. 26 (1894), 10)

Nourishment in concentrated form for the aged, delicate invalids, and (unqualified, presumably indelicate?) invalids! This reminded me of something that my mother once told me about one of her own childhood spells as a delicate invalid; she grew up in a little town on the Argentine pampas during the 1940s and 50s. I called her up and asked:

Me: Mom, what was that thing you once told me about how you had to drink meat juice...?

Mom: Oh, yes, when I was very sick with hepatitis. Nona would make this. She put a piece of filet mignon in the machine, and it would squeeze it, squeeze it, and the juice would fill a bowl. And the filet mignon afterwards was like a cardboard.

Me: And you would drink this??

Mom: No, you did not drink it raw! You warmed it in a bain marie, with some salt and pepper. Swirl it, swirl it until it is hot - and then you drink it.

Me: What was the machine?

Mom: It was like a press - it had two flat plates, metal.

Me: Where was this meat press machine? In the kitchen? Did Nona buy it specifically to make this?

Mom: Yes, she bought it specifically. It was very common. At this point, meat in Argentina was very cheap. It took two filets to make five ounces of liquid. You know how expensive that would be here!?

The machine my mother describes doesn't seem exactly equivalent to the Enterprise Manufacturing Company's model - which appears to be more like a masticating juicer than a "press." But the two seem similar enough, and they share a common purpose: the domestic production of a special restorative diet for the enfeebled.

But why meat juice? How did this become a therapeutic food?

There's a long tradition of prescribing aliment as a treatment for particular ailments. Galenic medicine used food to recalibrate the body's four humors, whose imbalances were thought to cause disease. There's also a long tradition in the West of associating meat-eating with masculine vim and vigor. Some of this back-story certainly shapes the widely held belief that meat is "strengthening" and "restorative." But a steak is materially different than its liquid runoff. How did people come to believe that the liquid squeezed out of meat contains the vital essence of the food, and not the substantial stuff that's left behind?

Part of the answer to this question can be found in the South American Pampas of 1865 -- specifically, Fray Bentos, Uruguay, home of Liebig's Extract of Meat Company. (You can find another version of this story in the Chemical Heritage Foundation's magazine.)

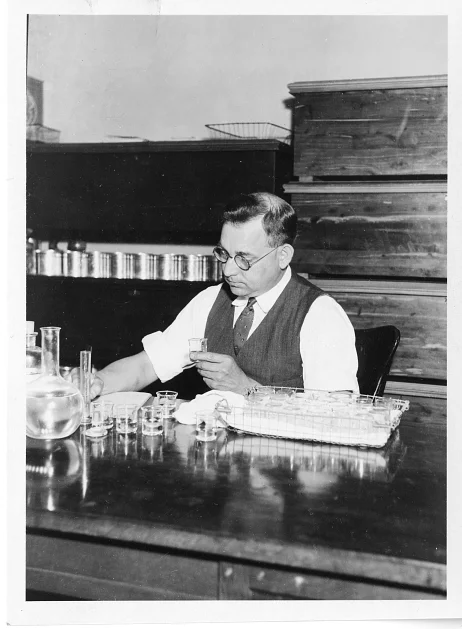

The company bears the name and the imprimatur of Baron Justus von Liebig (1803-1873), a Hessian, one of the pioneers of organic chemistry and of the modern chemical laboratory. Beginning in the Enlightenment, life processes (circulation, respiration, digestion) were investigated as physical and chemical processes, and one of the central questions for chemists was this: how does food become flesh? The answer to this was to be found not by alluding to some invisible vital force, but by careful analysis and quantification: calculating measurable changes in mass and energy, using tools like balances and calorimeters and conducting experiments with dogs and prisoners on treadmills. Chemists like Liebig engaged in a kind of nutritional accounting, identifying and quantifying the components of food that make life, growth, and movement possible.

This new way of thinking about food and bodies had consequences. It became possible to imagine a "minimal cuisine" - food that's got everything you want, nothing you don't. This was important and desirable for various reasons. The Enlightenment marked the emergence of the modern nation-state, which was responsible for the well-being of its population in new ways. Industrialization displaced rural populations, creating desperate masses of urban poor who were not only pitiable, but were also potential insurgents. Modern wars and colonial ventures meant provisioning armies and navies. There was an urgent and visible need for food that was cheap, portable, durable, its nutritional and energetic content efficiently absorbed to fuel the calculable energetic needs of soldiers and workers.

I won't go into too much detail about the chemistry (you can find a substantial account of the history of nutritional chemistry here), but Liebig, in the 1840s, believed that (nitrogen-containing) protein was the key to growth; fats and carbohydrates did nothing but produce heat. In his monumental 1842 tome, Animal Chemistry, or Organic Chemistry in its Application to Physiology and Pathology, he analyzed muscle, reasoning that protein is not only the substance of strength but also its fuel. An extract that concentrated the nutritional virtues of beef muscle fibers, then, could be the perfect restorative food.

This led him to develop a formula for his meat extract -- a concentrated "extract" of beef that promised to solve the growing nutritional crises of modernity. Imagine how much simpler it would be to provision an army when 34 pounds of meat could be concentrated into one pound of virtuous extract, which could feed 138 soldiers! No more bulky chuckwagons or questionable rations of salt pork and hardtack! Plenty of concentrated food for the poorhouse! Moreover, Liebig certainly believed in the healing power of meat extract. When Emma Muspratt, the daughter of his close friend James, a British chemical manufacturer, fell ill with scarlet fever while visiting the Liebigs in Giessen in 1852, Liebig, desperate to restore the failing girl to health, spoon-fed her on the liquid squeezed out of chicken. She survived.

However virtuous, Liebig's meat extract was too expensive to produce in Germany. In a public gesture that was only partly an act of self-promotion, Liebig offered his idea to the world, vowing to go into business with anyone who could make it happen. It would be nearly twenty years before someone took him up on it.

This brings us back to the South American pampas, where the missing ingredients in Liebig's formula could be found: cheap land, cheap cows, and ready access to Atlantic trade routes. A fellow German (or possibly Belgian), Georg Giebert, wandering the plains of Uruguay, noticed that the herds of grazing cattle rarely became anyone's dinner. Their valuable hides were tanned and turned into leather, but the carcasses were left to rot. Wouldn't it be great, Giebert wondered, if there were a way of using that meat, salvaging it by concentrating its nutritional value into easy-to-export extract?

Entering into partnership with Liebig, Giebert established a vast factory at Fray Bentos, where the meat was crushed between rollers, producing a pulpy liquid that was steam-heated, strained of its fat content, and then reduced until it became a thick, mahogany goo that was filtered and then sealed in sterile tins. Extractum Carnis Liebig - Liebig's Extract of Meat - first hit Europe in 1865 and was initially promoted as a cure for all-that-ails-you. Typhus? Tuberculosis? Heavy legs? Liver complaint? Nervous excitement? Liebig's Extract of Meat is the medicine for you!

Then came the skeptics. Chemists and physicians could find very little measurable nutritional content in Liebig's Extract of Meat. Dogs fed exclusively on Liebig's extract swiftly dropped dead. As British medical doctor J. Milner Fothergill thundered in his 1870 Manual of Dietetics: "All the bloodshed caused by the warlike ambition of Napoleon is as nothing compared to the myriad of persons who have sunk into their graves from a misplaced confidence in beef tea."

But this did not sink Liebig's extract of beef or the factory in Fray Bentos. (It would take a salmonella outbreak in the 1960s to do that.)

As Walter Gratzer notes in his book, Terrors of the Table: The Curious History of Nutrition, Liebig changed his tactics in the face of his critics, downplaying the medical benefits of beef extract, and instead arguing that its use is "to provide flavor and thus stimulate a failing appetite." "Providing flavor," then, was an essential functional component of the food. But this applied to more than just those with "failing appetites." Liebig's Extract of Meat was a success for decades not because "delicate invalids" and the enfeebled poor who needed cheap nutrition consumed it, but because ruddy Englishmen and other gourmands used it as an additive to increase the "savour" of their cuisine.

Beef extract provided what the 19th-century French gourmandizing scientist Brillat-Savarin dubbed "osmazome," and what we would call "umami": the glutamate richness that connoisseurs relished before science gave it a name. As Brillat-Savarin writes, "The greatest service chemistry has rendered to alimentary science, is the discovery of osmazome, or rather the determination of what it was."

And the Chemical Heritage Foundation reprints an ad for Liebig's from their collection which emphasizes the appeal of beef extract to the gourmet, rather than to the invalid:

"NOTICE: a first class French Chef de cuisine lately accepted an appointment only on condition of Liebig Company's Extract being liberally supplied to him."

Instead of becoming a "minimal food," fulfilling the nutritional needs of humans in the simplest and most efficient way, beef extract became a flavor enhancer - without, however, completely losing its hold on the health-giving and restorative benefits that it initially claimed. This is why the meat juice extractor was manufactured, and why my mother drank warm meat juice to recover from a bout of hepatitis.

The question that haunts all of these investigations into minimal foods is the following: Is flavor a luxury, or is it a necessary component of foods? Some later nutritionists believed that the beneficial effect of meat extract was due in part to its flavor - or, more precisely, the effect the flavor had in "stimulating the appetite." In Dietotherapy, a 1922 nutritional textbook by William Edward Fitch (available free on Google), Fitch cites Pavlov's experiments as evidence that no substance is a greater "exciter of gastric secretions" than "beef tea."

As the blog for the (totally real, possibly not dystopian) "food" product Soylent puts it, "there is more to food than nutrition.... Even a product as minimal as Soylent must concern itself with the “hedonic” aspects of eating. These include, but are not limited to: appearance, taste, texture, and flavor / odor." (I'm definitely writing more about Soylent and flavor in a future post...)

Regardless of whether it is nutritionally adequate, lack of flavor or poor flavor can be a problem for food. The argument that prison loaf is torture rests in part on its total absence of "hedonic" qualities. However, not only can flavor preferences be debated, but the importance of flavor itself can be called into question. Many nutritional experts at the turn of the twentieth century prescribed mild, bland diets as the best for health and well-being; "highly flavored" foods, they cautioned, were hazardous, a cause of obesity and its attendant diseases as well as of emotional instability. And in our own cleanse-obsessed era, an appreciation of the bracing flavor of green juice or the intense bitterness of turmeric is a sign of moral and physical enlightenment. Indeed, on the Soylent blog, the product's creators assure concerned readers that the inclusion of vanillin in the ingredient list is not to make "vanilla" Soylent, but rather to offset the "bitter and fishy" flavors of other ingredients. The stated goal is to make the flavor of Soylent "pleasant without being overly specific."

And on that note... enjoy this gorgeous collection of Liebig extract of beef chromolithographed trade cards.
